You don’t know what you don’t know. It’s as true in Claris FileMaker as it is in life. As Claris FileMaker developers, we strive to create workflows in our solutions that enable data to tell accurate, insightful, and succinct stories. Even if the process of data entry is disjointed, spanning multiple tables, users, and periods of time, we want to make sure to preserve the integrity of the entered information. Even if the data requires several stages of analysis and summary in order to tell its story meaningfully, we aim to make sure that this work is done out of sight, so that a user never notices how hard the solution is working to compile the complex report they want to generate.

The Power of Optimization

So we optimize. This is done using any number of techniques: storing aggregate totals in a number field instead of using fields to recalculate totals in real time, syncing data between tables instead of summarizing it across relationships, archiving older or seldom-accessed records in order to focus processing power on just recent and relevant records. These optimizations allow users to perform finds almost instantly, generate comprehensive financial statements in seconds, and perform other complex workflows inherent to the users’ business – all without having to change the users’ preferred workflow or requiring them to enter the same data multiple times.

When these optimized processes work their magic as expected, it is glorious. It is satisfying. It is remarkable.

Unforeseen Challenges and the Need for Verification

But what if one of these optimizations doesn’t work as intended once or twice? What if a user’s power or internet cuts out while submitting entered data? What if a guest developer adds a Commit Record script step in the middle of a data processing workflow, not realizing that the process is intended to be transactional until the final Commit or Revert? What if something else happens that you didn’t even consider a possibility, interfering with one or more of those super clever workflows and compromising the integrity of the solution’s data?

Enter scheduled verification scripts.

Verify That

Scheduled verification scripts are a series of scripts which check critical information about records or environmental factors against a control. These scripts run as subscripts of a parent script, which collects the results of each verification, and determines whether any errors have been detected. If there are errors to report, it notifies the relevant stakeholders and developers by text, email, or Slack of the issues detected. If no issues are detected, it sends the same stakeholders and developers a notification on predetermined dates, letting them know that the verification process is running as expected and no errors have been identified.

Addressing Behavioral Changes and Unknown Factors

You might be thinking, “If I write bulletproof code and test it thoroughly enough, it will always work as expected, and I would never need to double check the data. Why do I need to do this?” Your confidence is likely well-placed, and your code bulletproof. But even if you account for every possible set of circumstances within the current and previous versions of FileMaker, you can’t account for behavioral differences in future releases (FileMaker 18 vs. FileMaker 19 behavior change regarding the interpretation of slashes in the FMP URL, anyone?). Although stark behavioral changes in new releases don’t stick around for too long, they do happen for some period of time, and if that change affects the way your scripts process data, you’ll want to know as soon as the data is affected.

Determining the Control for Verification

What you decide to verify will depend on the types of data and methods of data processing found in your solution, but determining your control against which to verify the aforementioned data is more universal. Your control will always be the piece of static data or measure of change over time that would signal to you that something is wrong when compared to the object of verification. For example, if a script in your solution updates a static number field which represents the total dollar amount of all open invoices, your control would be the sum of the outstanding amount for invoice records marked as open. Another example might be comparing the sum of debits against the sum of credits posted to the general ledger in a given month. The control in this example is understanding the tenet of accounting which requires the sum of posted debits and the sum of posted credits to be equal for every transaction, and understanding that entries in a given transaction cannot have different posting dates. Given these requirements, if the sum of debits does not equal the sum of credits for a particular month, it signals an error in the posting process or that the ledger has been edited manually by an administrator. Essentially, you want to identify the red flag which would alert you that something is wrong with the data you’re verifying. Then, create a loop script which checks for that red flag for every record that needs verification.

Application in Genesis Accounting and Other Workflows

Codence’s accounting product, Genesis Accounting, leverages the stored total technique in which the process of posting an invoice or applying a customer payment updates various stored totals at the time of posting. These stored totals are used to generate top-level reports and statements in seconds even when there are hundreds of thousands of records in the General Ledger. The same reports would take minutes to generate (and, in some cases, hours to generate, depending on the number of records being summarized, where the solution is hosted, and the internet speed of the user) if these totals were calculated in real time using unstored calculation fields, button bar calculations, summary fields, or looping scripts.

The stored total technique works wonderfully, but it’s only worthwhile if the veracity of the totals is maintained. The core code employs a verification process, scheduled to run nightly on the server, and emails the designated stakeholders if there are any discrepancies between the stored total for a given account and the control: the total of actual general ledger records for the same account.

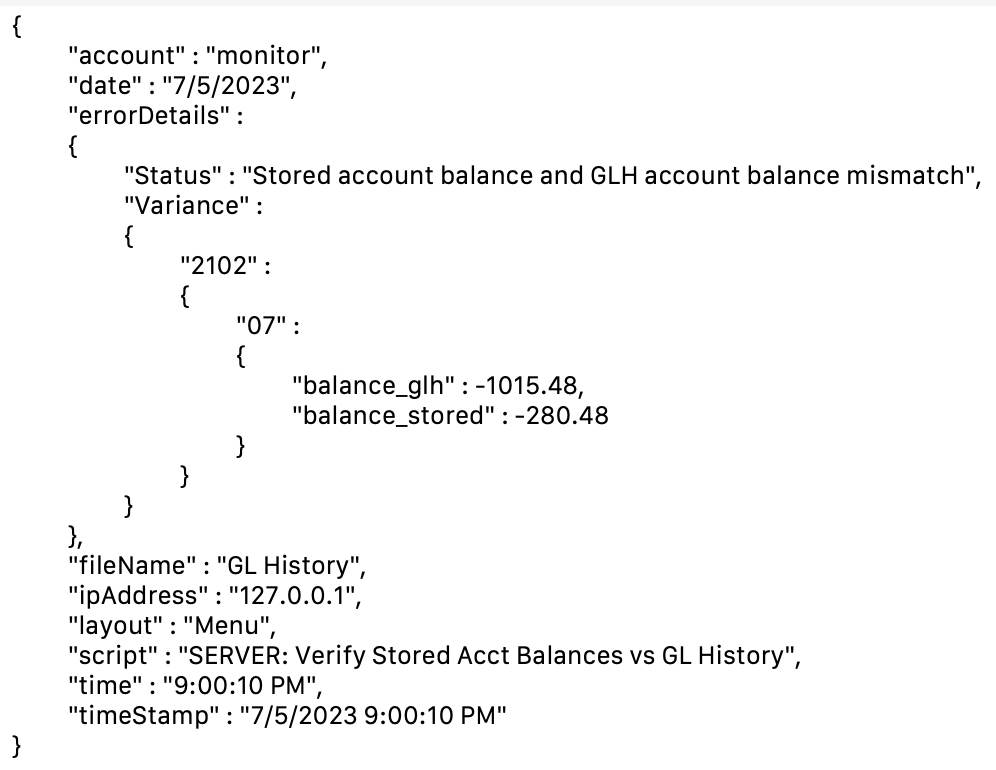

In the event of a discrepancy, the accounting period, account number, stored total, and actual total get stored in a JSON object, which gets logged as an error, and also gets returned to the parent script as a script result. Because the discrepancy data is stored in a JSON object, the information collected about the circumstances of the error can be as detailed as you want.

The parent script can call as many verification subscripts as you choose to include in the nightly routine. The verification check for Genesis Accounting, for example, runs four subscripts, which check

- the stored account totals against the actual balance for each account

- the sum of debits equals the sum of credits for each month in the open accounting period

- the stored outstanding balance for AP against the sum of the outstanding balance for unpaid payables

- the stored outstanding balance for AR against the sum of the outstanding balance for unpaid receivables

If the stored totals being verified are in a different table than the records representing the control amount, it’s also a good idea to perform a bidirectional check to make sure that the stored total table doesn’t have errant entries. For instance, a separate table which stores stored account totals may have records for accounts that are not present in the actual GL History data. While the stored total workflow should already have a process to “reset” or delete stored total records to match the actual data, they may still be there for some reason (the process never finished running because a user force quit, the server got reset) and they may show up on reports. A bidirectional verification will catch any records with totals which no longer match the control data, and alert you or the stakeholder that a reset needs to get performed.

Another example of the benefits of a verification script with notifications is comparing stored inventory totals against expected or actual inventory totals. In a custom system for one of our clients, Jody Barney, a very talented Codence developer, is running this check for many reasons, the foremost being that the client’s solution is made up of many files, multiple developers, and various processes to which her team does not have access and about which her team may not know. Still, they are responsible for delivering accurate inventory counts or calling out when the counts are not correct, even though they may not have control over the processes which set these counts. Jody describes in detail the way her team uses error trapping for inventory counts and an integration with Slack to alert a client if recorded inventory totals for particular products were outside of the acceptable variance when compared to the expected inventory totals for that product line.

There are many great candidates for verification, and the person most familiar with the inner workings of the solution in question will be able to determine which processes could use a double-check, but in general, the most eligible workflows are those which involve

- stored data which represents a summary of other records

- data which must adhere to external rules or structures (such as accounting)

- syncing based on certain conditions

- archiving based on certain conditions

Error Notification and Customization

If any verification subscripts return a script result with discrepancy information, the parent script sends an email to the chosen stakeholders, letting them know about the errors which occurred, organized by the type of verification performed and the JSON detail with the error context. Alternatively, the email body could contain one or more log record IDs for each verification process that returned an error or a link to open the solution and navigate to the error log with the details.

The type of notification can be customized as well. The verification script can alert the stakeholder by sending a text notification via email to the stakeholders’ cell phones using their phone carrier’s email address (for example, using a Verizon customer’s cell phone number in conjunction with “@vtext.com” will deliver a text to their cell phone). Or, if your organization uses Slack most often, the alerts could be integrated with and delivered to a Slack channel. Codence’s own integration whiz, Charlie Bailey, put together a great guide to integrating the output from your FileMaker solution into Slack.

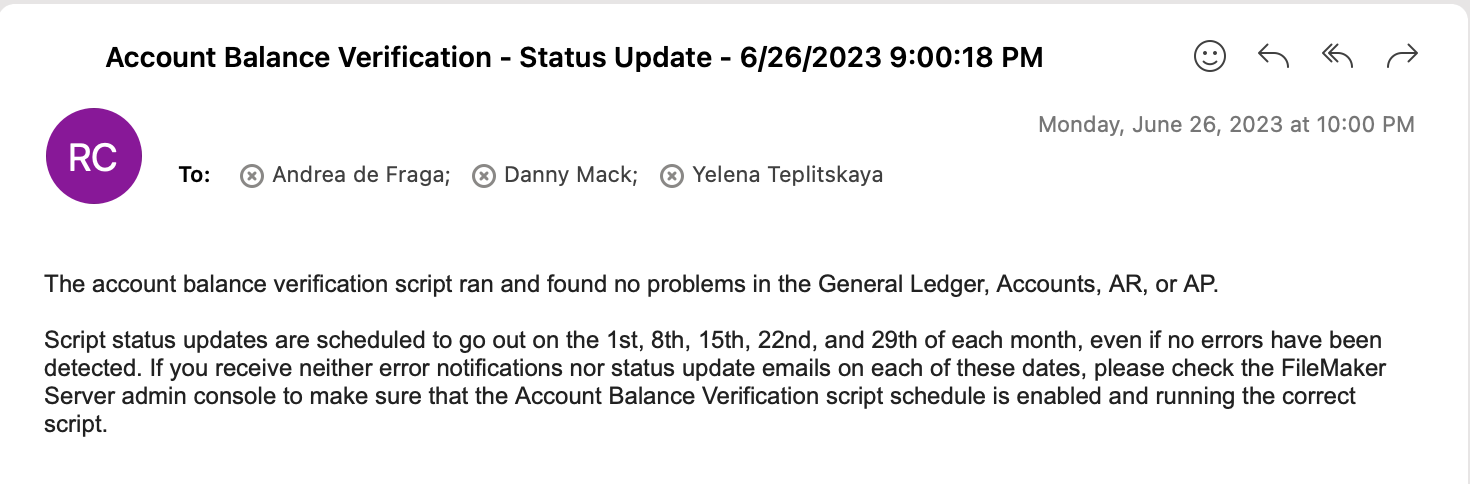

Confirmation and Server Status Checks

The most surprisingly satisfying aspect of the verification routine, though, is that it sends a notification to the stakeholders confirming that the process has run and has not found any errors. While this may seem unnecessary, it’s actually a great way to check that the verification routine is still enabled and running on FileMaker Server. The emails confirming that no errors were detected certainly don’t need to go out nightly. Instead, you can determine the dates when the “no errors” email goes out to the stakeholders, and you may even include this information in the body of the confirmation email. This way, if the script schedule ever gets disabled by mistake, the stakeholders can easily reference an earlier email to see when they should expect a status update email.

Proactive Data Integrity

Verifying that processes performed as expected can be a relatively straightforward way to provide additional peace of mind to you, your clients, your bosses, and any other stakeholders who have a vested interest in the integrity of a solution’s data and its internal mechanisms. If the verification routine discovers any errors, you can rest assured that you were proactive in finding the error before it affected the system’s users and drove decision-making based on incorrect data.

Of course, knowing that an error has occurred doesn’t automatically mean you’ll know how to fix it. But this way, at least, you’ll know what you don’t know – and that gives you back your edge.

Built with you in mind

Speak to one of our expert consultants about making sense of your data today. During

this free consultation, we'll address your questions, learn more about your business, and

make some immediate recommendations.